Tags: Adaptive Learning, Assessment, Canvas, MasteryPaths, Peer-Reviewed Entry, Placement Testing

Description

Digital technologies are widely used in language teaching and testing (Finnerty, 2015; Meunier, 1994). Adaptive testing technologies in particular offer rigor and convenience in language assessment (Rasskazova et al., 2017). The Canvas MasteryPaths feature allows instructors to adapt learning content and assessments based on students’ performance.

During UCF Global’s development of our online English program, we faced a challenge with placement testing. As in a traditional face-to-face English program for international students, we needed a reliable and valid tool to place students accurately into the appropriate level of instruction. However, we also needed the assessment to be adaptive. Because incoming students have a wide range of language skills, we needed to ensure that students with limited skills did not feel overburdened by content above their level. We also needed to make sure that students who were well versed in English did not feel that the assessment was too far below their level. How could we do this in an online environment, and more specifically within our learning management system (Canvas)? After much research, we designed an adaptive assessment in Canvas by using the MasteryPaths functionality.

What is MasteryPaths, and how did we apply it? MasteryPaths allows you to design an assessment or an assignment where all students begin at the same place. Then, based on their performance on that assessment, you can branch them in different directions: students who are successful move in one direction, students who are less successful move in another, and students who really struggle move in yet another. By employing MasteryPaths, we created an adaptive, branched exam that allowed students to demonstrate their linguistic knowledge without the burden of overtesting or undertesting.

Link to example artifact(s)

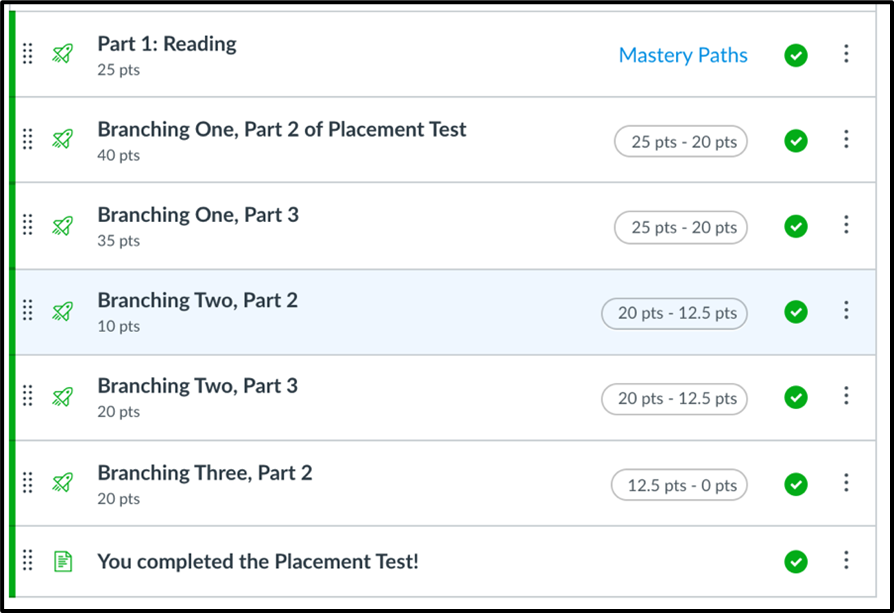

During orientation week in our online English program, first-year international students worked through the orientation course and were then given instructions for the placement exam. For students, the branching happened behind the scenes: they were unaware that the test questions were adjusting to their language skills. Figure 1 shows an instructor’s view of the placement exam. All students first complete the reading test. Based on the results of the reading test, students are branched into three different paths.

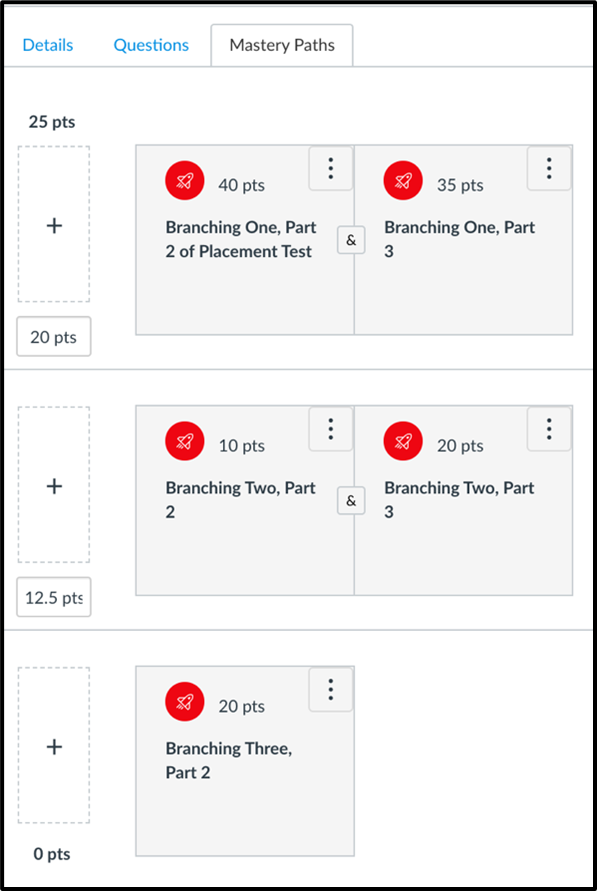

Figure 2 shows the detailed criteria that directed students into the three paths. Students who scored 20 or higher on the reading test took both a full grammar test (Branching One, Part 2) and a writing test (Branching One, Part 3). Students who scored at least 12.5 but below 20 took a grammar test (Branching Two, Part 2) and a listening test (Branching Two, Part 3). Students who scored below 12.5 took an easier version of the grammar test (Branching Three, Part 2). Students did not see the branching paths other than their own. Once a student completed the placement exam, the instructors received a score that allowed us to easily place the student into the right English curriculum.
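The branching criteria can be sketched in a few lines of Python. This is only an illustration of the decision rule, not how Canvas implements MasteryPaths: the names `Branch` and `branch_for_reading_score` are hypothetical, and the sketch assumes that a score of exactly 20 falls into Branching One and a score of exactly 12.5 falls into Branching Two.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Branch:
    """A path through the placement exam (hypothetical helper type)."""
    name: str
    follow_up_tests: Tuple[str, ...]


# Paths and follow-up tests as described for Figure 2.
BRANCH_ONE = Branch("Branching One", ("grammar (full)", "writing"))
BRANCH_TWO = Branch("Branching Two", ("grammar", "listening"))
BRANCH_THREE = Branch("Branching Three", ("grammar (easier)",))


def branch_for_reading_score(score: float) -> Branch:
    """Pick the path a student follows after the reading test.

    Assumption: lower bounds are inclusive, so 20 goes to Branching One
    and 12.5 goes to Branching Two.
    """
    if score >= 20:
        return BRANCH_ONE
    if score >= 12.5:
        return BRANCH_TWO
    return BRANCH_THREE


if __name__ == "__main__":
    for reading_score in (23, 15.5, 9):
        branch = branch_for_reading_score(reading_score)
        print(reading_score, branch.name, branch.follow_up_tests)
```

Writing the rule out this way makes the boundary cases explicit, which is worth checking against the score ranges configured in the course before students take the exam.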

Canvas MasteryPaths can be used not only in language testing but also in other disciplines. It offers an easy way to adapt assessments and learning content and to deliver personalized question items to students at their own level.

Detailed instructions for the feature can be found in the Canvas Guides: https://community.canvaslms.com/t5/Instructor-Guide/How-do-I-use-MasteryPaths-in-course-modules/ta-p/906

Such an adaptive, branched exam could likely be replicated in other systems that have a conditional feature. Alternatively, an instructor can divide a comprehensive examination into several parts and manually assign students to branches of the exam based on their section results.
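For the manual approach, a short script can sort a whole roster into branches from the first-section scores. The sketch below is one possible way to do this, not part of the original workflow: the function `assign_branches`, the student identifiers, and the sample scores are all hypothetical, and the cutoffs reuse the reading-test thresholds described for Figure 2 with inclusive lower bounds assumed.

```python
from bisect import bisect_right
from collections import defaultdict
from typing import Dict, List

# Ascending lower bounds of the middle and top score bands (assumed inclusive).
CUTOFFS = [12.5, 20]
# Branch labels ordered from the lowest score band to the highest.
BRANCHES = ["Branching Three", "Branching Two", "Branching One"]


def assign_branches(section_scores: Dict[str, float]) -> Dict[str, List[str]]:
    """Group students by branch based on their first-section score."""
    roster = defaultdict(list)
    for student, score in section_scores.items():
        # bisect_right counts how many cutoffs the score has reached,
        # which is also the index of the matching branch label.
        roster[BRANCHES[bisect_right(CUTOFFS, score)]].append(student)
    return dict(roster)


if __name__ == "__main__":
    # Made-up scores for illustration only.
    sample = {"student_a": 23, "student_b": 15.5, "student_c": 9}
    for branch, students in assign_branches(sample).items():
        print(branch, students)
```

Keeping the cutoffs in a single list means that adding a fourth branch only requires one more cutoff and one more label.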

Link to scholarly reference(s)

Finnerty, C. (2015). The CAT is out of the bag. Modern English Teacher, 24(3), 15–17.

Meunier, L. E. (1994). Computer Adaptive Language Tests (CALT) Offer a Great Potential for Functional Testing. Yet Why Don’t They? CALICO Journal, 11(4), 23–39.

Rasskazova, T., Muzafarova, A., Daminova, J., & Okhotnikova, A. (2017). Computerised Language Assessment: Limitations and Opportunities. ELearning & Software for Education, 2, 173–180. https://doi.org/10.12753/2066-026X-17-110

Citation

Cavage, C., & Chen, B. (2021). Streamlining placement testing with an adaptive, branched exam. In A. deNoyelles, A. Albrecht, S. Bauer, & S. Wyatt (Eds.), Teaching Online Pedagogical Repository. Orlando, FL: University of Central Florida Center for Distributed Learning. https://topr.online.ucf.edu/streamlining-placement-testing-with-an-adaptive-branched-exam/